[DSP] W02 - Vector Spaces

contents

discrete signal vectors

- a generic discrete signal:

- set of ordered number sequence

- four classes of signals

- finite length

- infinite length

- periodic

- finite support

- digital signal processing:

- signal analysis

- signal synthesis

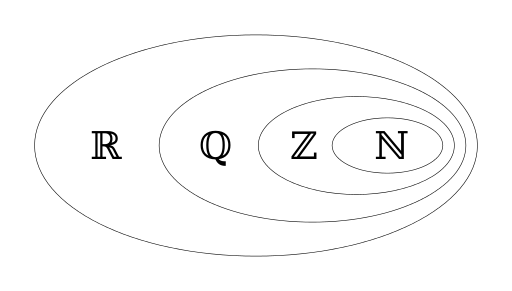

- number sets:

- : natural numbers

- whole numbers

- : integers

- : rational numbers

- inclusive of recurring mantissa

- : irrational numbers

- non-repeating and non-recurring mantissa

- value,

- : real numbers (everything on the number line)

- includes rational and irrational numbers

- : complex numbers

- includes real and imaginary numbers

- : natural numbers

fig: number sets

vector space framework:

- justification for dsp application:

- common framework for various signal classes

- inclusive of continuous-time signals

- easy explanation of Fourier Transform

- easy explanation of sampling and interpolation

- useful in approximation and compression

- fundamental to communication system design

- common framework for various signal classes

- paradigms for vector space application to discrete signals

- object oriented programming

- the instantiated object can have unique property values

- but for a given object class, the properties and methods are the same

- lego

- various unit blocks, same and different

- units assembled in different ways to build different complex structures

- similarly, a complex signal is broken down into a combination of basis vectors for analysis

- object oriented programming

- key takeaways

- vector spaces are general objects

- vector spaces are defined by their properties

- once signal properties satisfy vector space conditions

- vector space tools can be applied to signals

vector spaces

- some vector spaces

- : 2D space

- : 3D space

- : N real numbers

- : N complex numbers

- :

- square-summable infinite sequences

- :

- square-integrable functions over interval

- vector spaces can be diverse

- some vector spaces can be represented graphically

- helps visualize the signal for analysis insights

- :

- :

- both can be visualized in a cartesian system fig: vector in 2D space

- :

- function vector space

- can be represented as sine wave along time fig: L2 function vector

- others cannot be represented graphically:

- for

- for

vector space axioms

- informally, a vector space:

- has vectors in it

- has scalars in it, like

- scalers must be able to scale vectors

- vector summation must work

- formally, (: vector space)

-

- commutative addition

-

- distributive addition

-

- distributive scalar multiplication over vectors

-

- distributive vector multiplication over scalers

-

- associative scalar multiplication

-

- null vector, , exists

- addition of and another vector returns

- summation with null vector is commutative

-

- an inverse vector exists in vector space such that adding the vector with it’s inverse yields the null vector

-

- examples:

- : vector of N real numbers

- two vectors from this space look like:

- the above mentioned rules apply to these vectors and can be verified

- two vectors from this space look like:

- : vector of N real numbers

inner product

- aka dot product: measure of similarity of two vectors

- takes two vectors and outputs a scaler which indicates how similar the two vectors are

- inner product axioms

-

- distributive over vector addition

-

- commutative with conjugation

-

- distributive with scalar multiplication

- when scalar scales the second operand

-

- distributive with scalar multiplication

- conjugate scalar if it scaling the first operand

-

- self inner product ( \in \mathbb{R})

-

- if self inner product is 0, then the vector is the null vector

- if ,

- then and are orthogonal

-

- inner product is computed differently for different vector spaces

- in vector space:

-

- where : angle between and

- when two vectors are orthogonal to each other

- , so , so

-

- in vector space:

-

- norm:

- the inner product of a symmetric and an anti-symmetric function is 0

- i.e. they are orthogonal to each other and cannot be expressed as a factor of the other in any way

- example 1:

- - anti-symmetric

- - symmetric

fig: inner product of a symmetric and an anti-symmetric function

- example 2:

-

norm and distance

- norm of a vector: - inner product of a vector with itself - square of the norm (length) of a vector -

- distance between two vectors:

- the norm of the difference of the two vectors

- the distance between orthogonal vectors is not zero

- in , norm is the distance between the vector end points

- is the difference vector

-

- connects the end points of the vectors and

- see triangle rule of vector addition, and pythagorean theorem

- in , the norm is the mean-squared error:

signal spaces

completeness

- consider an infinite sequence of vectors in a vector space

- if it converges to a limit within the vector space

- then said vector space is “complete”

- also called Hilbert Space

- limiting operation is ambiguous, definition varies from one space to the other

- so some limiting operation may fail and point outside the vector space

- such vector spaces are not said to be complete

common signal spaces

- while vectors spaces can be applied to signal processing

- not all vector spaces can be used for all signals

- different signal classes are managed in different spaces

- : vector space of N complex tuples

- valid signal space for finite length signals

- vector notation:

- where are complex tuples

- also valid for periodic signals

- vector notation:

- all operations are well defined and intuitive

- inner product:

- well defined for all finite-length vectors in ( \mathbb{C}^N)

- valid signal space for finite length signals

- the inner product for infinite length signals explode in - inappropriate for infinite length signal analysis

- : vector space of square-summable sequences - requirement for sequences to be square-summable: - - sum of squares of elements of the sequence is less than infinity - all sequences that live in this space must have finite energy - “well-behaved” infinite-length signals live in - vector notation:

- lot of other interesting infinite length signals do not live in

- examples:

- these have to be dealt with case-by-case

- examples:

basis

- a basis is a building block of a vector space

- a vector space usually has a few basis vectors called bases

- like the lego unit blocks

- any element in a vector space can be

- built with a combination of these bases

- decomposed into a linear combination of these bases

- the basis of a space is a family of vectors which are least like each other

- but they all belong to the same space

- as a linear combination, the basis vectors capture all the information within their vector space

- fourier transform is simply a change of basis

vector families

- : family of vectors - : index of the basis in the family

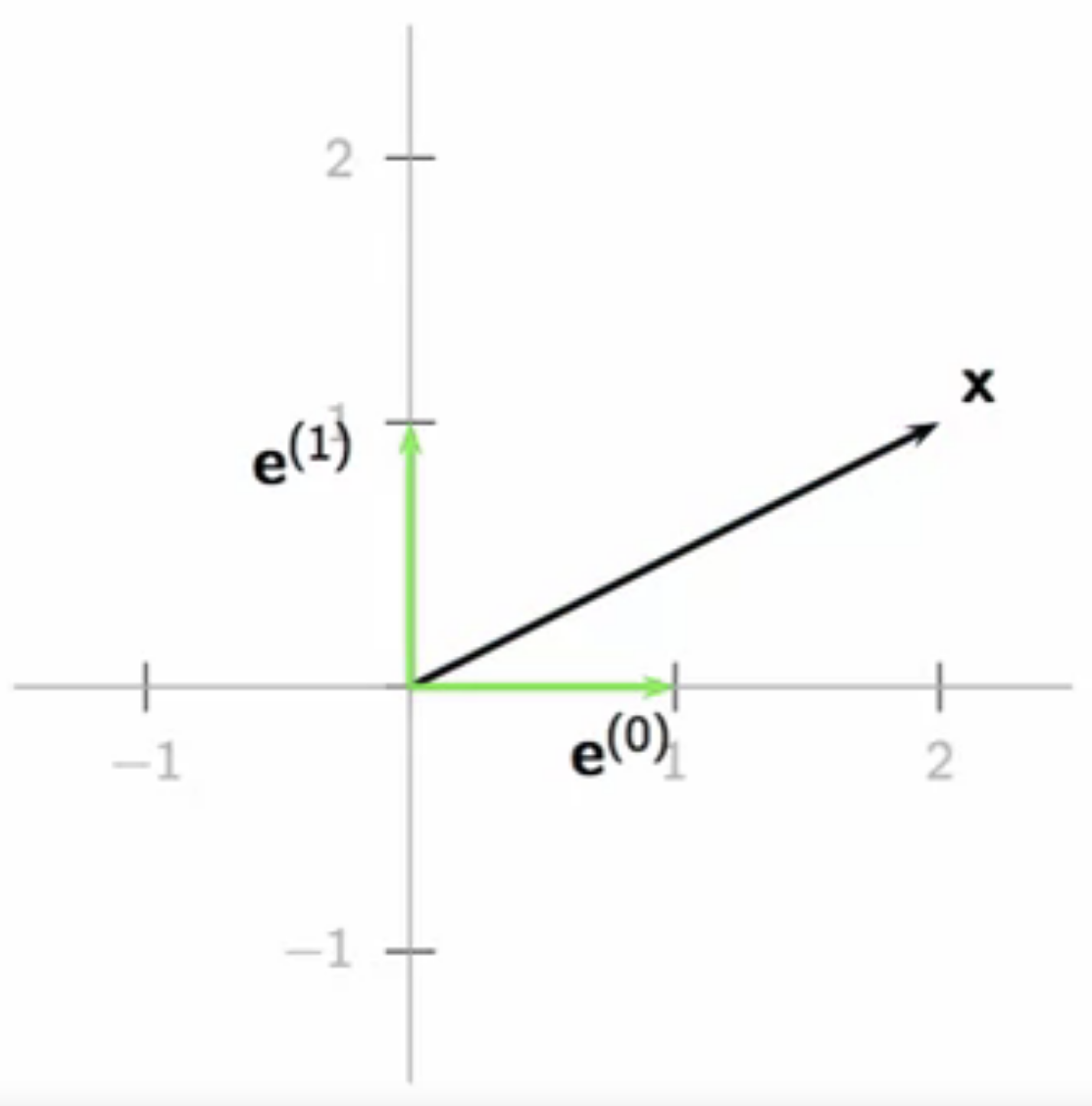

- canonical basis:

- this family of basis vectors is denoted by

- any vector can be expressed as a linear combination of ( \textbf{e}^k) in ( \mathbb{R}^2 )

- graphical example:

fig: linear combination of canonical ( \textbf{e}^k) in (\mathbb{R}^2)

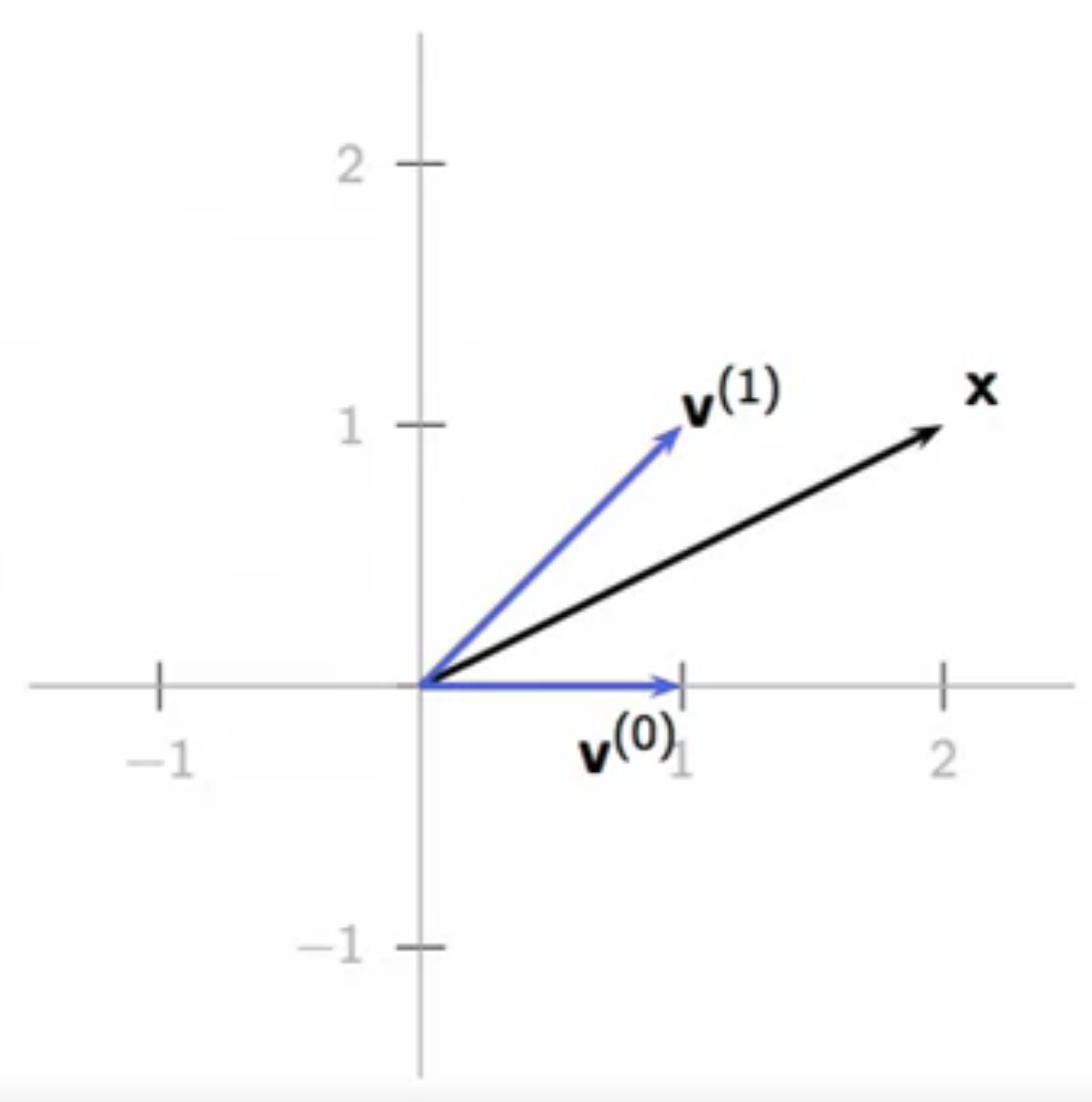

- non-canonical basis example:

- any vector can be expressed as a linear combination of these vectors in

- the coefficients of the bases will be different compared to the canonical bases

- graphical example:

-

- by rule of parallelogram vector addition

fig: linear combination of non-canonical in

- only vectors which are linearly independent can be the basis vectors of a space

- infinite dimensional spaces bases:

- some limitations have to be applied to obtain basis vectors of infinite dimension

- a canonical basis of - , -th position,

- function vector spaces:

- basis vector for functions:

- the fourier basis for functions over an interval : - - any square-integrable function in can be represented as a linear combination of fourier bases - a square wave can be expressed as a sum of sines

- formally, in a vector space ,

- a set of vectors from , is a basis for if:

`

- : ,

- the coefficients are unique

- this implies linear independence in the vector basis

- `

orthonormal basis

- the orthogonal bases are the most important

- of all possible bases for a vector space

- orthogonal basis: for

- vectors of an orthogonal basis are mutually orthogonal

- their inner product with each other is zero

- in some spaces, the orthogonal bases are also orthonormal

- i.e. they are unit norm

- their length

- the inner product of any two vectors in the orthonormal bases is the difference between their indices

- gran-schmidt algorithm can be used to orthonormalize any orthogonal bases

- obtaining the bases coefficients for bases can be involved and challenging

-

- : a vector as the linear combination of basis vectors ,

- with corresponding coefficients

- however, they are easy to obtain with an orthonormal basis

-

change of basis

-

- is the target basis, is the original basis

- if is orthonormal:

- this forms the core of the discrete fourier transform algorithm for finite length signals

- can be applied to elementary rotations of basis vectors in the euclidean plane

- the same vector has different coefficients in the original and the rotates bases

- the rotation matrix is obtained by the matrix multiplication of the original and the target bases

- the rotation matrix applied to a vector in the original bases yields the coefficients of the same vector in the rotated bases

- the matrix multiplication of the rotation matrix with its inverse yields the identity matrix

subspaces

- subspaces can be applied to signal approximation and compression

- with vector and subspace

- approximate with by

- take projection of the vector in on

- due to the adaptation of vector space paradigm for signal processing

- this geometric intuition for approximation can be extended to arbitrarily complex vector spaces

vector subspace

- a subspace is a subset of vectors of a vector space closed under addition and scalar multiplication

- classic example:

- in-plane vector addition and scalar multiplication operations do not result in vectors outside the plane

- uses only 2 of the 3 orthonormal basis of

- the subspace concept can be extended to other vector spaces

- : function vector space

- subspace: set of symmetric functions in

- when two symmetric functions are added, they yield symmetric functions

- : function vector space

- subspaces have their own bases

- a subset of their parent space’s bases

least square approximations

- orthonormal basis for

- orthogonal projection:

- the orthogonal projection: the “best” approximation of over - because of two of its properties - it has minimum-norm error: - - orthogonal projection minimizes the error between the original vector and the approximated vector - this error is orthogonal to the approximation: - - the error and the basis vectors of the subspace are maximally different - they are uncorrelated - the basis vectors cannot capture any more information in the error

- example: polynomial approximation

- approximating from vector space to

- i.e. vector space of square-integrable functions to a subspace of polynomials of degree

- generic element of subspace has form

- a naive, self-evident basis for this subspace:

- not orthonormal, however

approximation with Legendre polynomials

- example goal:

- approximate to

- : polynomials of the degree 2

- approximate to

- build orthonormal basis from naive basis

- use Gram-Schmidt orthonormalization procedure for naive bases:

- : original naive bases

- : orthonormalized naive bases

- this algorithm takes one vector at a time from the original step and incrementally produces an orthonormal set

-

- for the first naive basis vector, normalize it with 1

- project the second naive basis vector on to the normalized first basis

- then subtract this projection from the second basis vector to get the second normalized basis

- this removes the the first normalized basis’s component from the second naive basis

-

- normalize the extracted vector

-

- this process yields:

- and so on

- these are known as Legendre polynomials

- they can be computed to the arbitrary degree,

- for this example, up to degree 2

- use Gram-Schmidt orthonormalization procedure for naive bases:

- project over the orthonormal basis

- simply dot product the original vector over all the legendre polynomials i.e. the orthogonal basis of the subspace

-

- compute approximation error

- so using the orthogonal projection

- this subspace has only one non-zero basis:

- so using the orthogonal projection

- compare error to taylor’s expansion approximation

- well known expansion, easy to compute but not optimal over interval

- taylor’s approximation:

- in both cases, the approximation is a straight line, but the slopes are slightly different ( 10% off)

- the taylor’s expansion is a local approximation around 0,

- the legendre polynomials method minimizes the global mean-squared-error between the approximation and the original vector

- the error of the legendre method has a higher error around 0

- however, the energy of the error compared to the error of the taylor’s expansion is lower in the interval

- error norm:

- legendre polynomial based approximation:

- taylor series based approximation:

- legendre polynomial based approximation:

haar spaces

- haar spaces are matrix spaces

- note: matrices can be reshaped for vector operations

- encodes matrix information in a hierarchical way

- finds application in image compression and transmission

- it has two kinds of basis matrices

- the first one encodes the broad information

- the rest encode the details, which get finer by the basis index

- each basis matrix has positive and negative values in some symmetric pattern

- the basis matrix will implicitly compute the difference between image areas

- low-index basis matrices take differences between large areas

- high-index matrices take differences in smaller, localized areas

- this is a more robust way of encoding images for transmission methods prone to losses on the way

- if images are transmitted as simple matrices, they are prone to being chopped is loss in communication occurs during transmission

- haar encoding transmits coefficients not pixel by pixel but hierarchically in the level of detail

- so if communication loss occurs, the broad idea of the image is still conveyed

- while continued transmission will push up the detail level

- approximation of matrices to harr space is an example of progressive encoding